The Evolution of Language Models - and how we are all AI Experts...

Language models have undergone remarkable advancements in recent years, transforming from mere dreamlike concepts into powerful tools that aid us in various domains. These advancements have been fuelled primarily by artificial intelligence (AI) research and the availability of large datasets for training. In this blog post, we will explore the structure of Large Language Models (LLMs) and their exponential growth, alongside the rise of Adversarial Generative Networks (AGNs). We will delve into how LLMs are trained on vast datasets, the role of AGNs, and how LLMs utilise prompts to generate responses. Moreover, we will discuss the potential of LLMs in processing real-time information and the ethical implications associated with this newfound power.

Understanding Large Language Models (LLMs)

Large Language Models are sophisticated artificial intelligence systems designed to generate coherent, contextually relevant text based on a given prompt or context. They are used across natural language processing, chatbots, language translation, content generation and more, and have gained significant attention due to their ability to understand and generate human-like text.

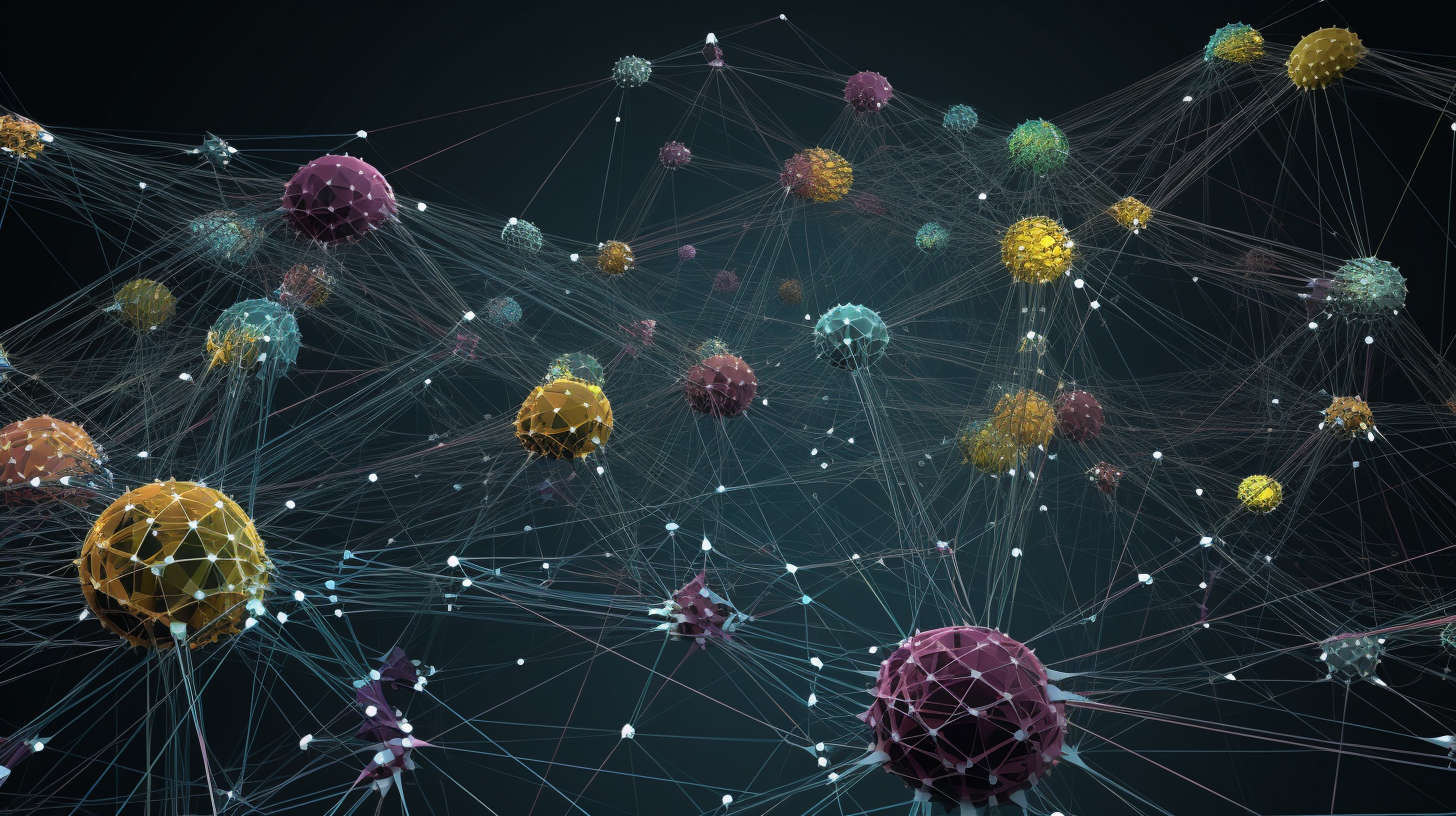

At their core, LLMs are deep learning models built on advanced neural network architectures: today these are usually transformers, while earlier neural language models used recurrent neural networks (RNNs). These models learn the statistical properties and patterns of natural language by training on vast amounts of text data. Their central task is to predict what comes next: given a sequence of words (more precisely, tokens), an LLM assigns a probability to every possible next token, and text is generated by repeatedly choosing a token and appending it to the sequence.
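To make "predicting the next word from learned patterns" concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy corpus and turns those counts into probabilities. It is an illustration under simplifying assumptions, not how an LLM is built: real LLMs replace the counting with a neural network, work on sub-word tokens, and condition on thousands of preceding tokens rather than one. The corpus and function names are made up for the example.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def next_word_probs(follows, word):
    """Turn the follow-counts for `word` into a probability distribution."""
    counts = follows[word.lower()]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

corpus = "once upon a time there was a king . once upon a time there was a queen ."
model = train_bigram(corpus)
probs = next_word_probs(model, "a")
print(probs)                      # {'time': 0.5, 'king': 0.25, 'queen': 0.25}
print(max(probs, key=probs.get))  # time
```

A bigram model only ever looks one word back, which is exactly the limitation that architectures able to capture long-range context were designed to overcome.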

LLMs have found diverse applications across numerous domains:

Natural Language Processing

LLMs can perform tasks like text classification, sentiment analysis, named entity recognition, and part-of-speech tagging.

Chatbots and Virtual Assistants

LLMs enable the development of conversational agents that can provide human-like responses in real-time interactions.

Language Translation

LLMs have been used to improve machine translation systems, facilitating communication across different languages.

Content Generation

LLMs can generate articles, stories, code snippets, and other forms of textual content.

Question Answering Systems

LLMs can understand and answer questions based on a given context or knowledge base.

LLMs typically consist of the following components:

Embedding Layer

Converts words or tokens into continuous vector representations (word embeddings) that capture semantic and syntactic information.

Hidden Layers

Comprise multiple layers of neural networks, such as RNNs or transformers, which process the embedded input and capture contextual dependencies.

Attention Mechanisms

Enable the model to focus on relevant parts of the input sequence by assigning different weights to different tokens.

Decoding Layer

Generates the output by converting the final hidden states into a score for every token in the vocabulary and turning those scores into probabilities (typically with a softmax), from which the next token is chosen.

Training Mechanism

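LLMs are trained on large datasets with self-supervised learning, usually by predicting the next token of real text. The model's parameters are optimized by maximum likelihood estimation, that is, by minimizing the cross-entropy loss of those predictions with gradient-based optimization.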
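The embedding layer, the decoding (output) layer and the maximum-likelihood training objective can be connected in a few lines of NumPy. This is a simplified sketch under stated assumptions: a made-up five-token vocabulary, random untrained weights, and no hidden or attention layers (each embedding is projected straight onto the vocabulary), so it shows only how the pieces fit together.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["once", "upon", "a", "time", "<eos>"]        # toy vocabulary (illustrative)
token_to_id = {tok: i for i, tok in enumerate(vocab)}
d_model = 8                                           # embedding size (assumed)

# Embedding layer: one learnable vector per token ID.
embedding = rng.normal(scale=0.1, size=(len(vocab), d_model))
# Decoding/output layer: projects a hidden vector onto one score (logit) per vocabulary entry.
output_proj = rng.normal(scale=0.1, size=(d_model, len(vocab)))

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))     # subtract the max for numerical stability
    return e / e.sum(axis=-1, keepdims=True)

def next_token_distribution(token_ids):
    hidden = embedding[token_ids]                     # (seq_len, d_model); real models apply transformer layers here
    return softmax(hidden @ output_proj)              # (seq_len, vocab_size) probabilities

def cross_entropy(token_ids):
    """Mean negative log-likelihood of each actual next token: the quantity minimised in MLE training."""
    probs = next_token_distribution(token_ids[:-1])
    targets = token_ids[1:]
    return -np.mean(np.log(probs[np.arange(len(targets)), targets]))

ids = np.array([token_to_id[t] for t in ["once", "upon", "a", "time"]])
print(next_token_distribution(ids).shape)  # (4, 5)
print(cross_entropy(ids))                  # roughly log(5) ≈ 1.61, since untrained weights give near-uniform predictions
```

Training would adjust `embedding` and `output_proj` by gradient descent to push this loss down; an untrained model scores close to the loss of uniform guessing over five tokens.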

The architecture of LLMs has evolved significantly, and transformers are now widely adopted: their self-attention captures long-range dependencies, and they process all positions of a training sequence in parallel rather than one token at a time as RNNs do.

LLMs are powerful AI systems that can understand and generate human-like text based on given prompts or contexts. By training on large datasets and leveraging advanced neural network architectures, LLMs have emerged as versatile tools with applications in natural language processing, chatbots, language translation, and content generation. Understanding the components and architecture of LLMs provides a foundation for exploring their training methods, response generation, and the ethical implications associated with their usage.

Training Language Models

Training Language Models is a crucial step in their development, enabling them to learn the statistical properties and patterns of natural language. By leveraging large datasets and employing unsupervised learning techniques, LLMs become proficient in generating contextually relevant and coherent text.

LLMs rely on large-scale datasets to capture the intricacies of language. These datasets consist of vast collections of text from diverse sources such as books, articles, and websites. By training on extensive and diverse data, LLMs can learn syntactic structures, semantic relationships, and contextual nuances. The availability of large datasets allows LLMs to generalise their understanding and generate high-quality text.

Unsupervised learning, or more precisely self-supervised learning, plays a pivotal role in training LLMs: the training targets come from the text itself, so no human labelling is needed. The approach used by GPT-style models is autoregressive language modelling, where the LLM predicts the next word in a sequence based on the preceding context. By repeating this prediction across the whole dataset and adjusting its parameters whenever it predicts poorly, the LLM develops an internal representation of language that captures the statistical dependencies and patterns within the text. This process is what enables LLMs to generate coherent and contextually appropriate responses.
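The self-supervised nature of this setup is easiest to see in code: the targets are simply the next tokens of the text itself, so every position in the corpus yields a training example without any human labelling. A minimal sketch, assuming whitespace-split words as a stand-in for a real tokenizer; the helper name is hypothetical:

```python
def make_next_token_examples(text, context_size):
    """Slide over the text and pair each context window with the token that follows it."""
    tokens = text.split()                      # stand-in for a real sub-word tokenizer
    examples = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        examples.append((context, tokens[i]))
    return examples

for context, target in make_next_token_examples("the cat sat on the mat", context_size=3):
    print(context, "->", target)
# ['the'] -> cat
# ['the', 'cat'] -> sat
# ['the', 'cat', 'sat'] -> on
# ['cat', 'sat', 'on'] -> the
# ['sat', 'on', 'the'] -> mat
```

Real LLMs use context windows of thousands of tokens, but the principle of turning raw text into (context, next token) pairs is the same.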

The training process of LLMs typically has two stages: pre-training and fine-tuning. In the pre-training stage, an LLM (usually a transformer) is trained on a large, general-purpose dataset, where it learns broad knowledge of language such as grammar, word meanings, and dependencies across long stretches of text. Fine-tuning follows pre-training and continues training the pre-trained LLM on smaller, task-specific data. This allows the LLM to adapt its knowledge and capabilities to specific downstream tasks or domains.
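The two stages can be imitated at toy scale. In this sketch (an assumption-laden stand-in, not a transformer), the "model" is a matrix of bigram logits trained by gradient descent on next-word cross-entropy. It is first pre-trained on a small general corpus and then fine-tuned by continuing training from those weights on a made-up domain corpus, with a lower learning rate and fewer steps.

```python
import numpy as np

def build_vocab(*corpora):
    words = sorted({w for text in corpora for w in text.split()})
    return {w: i for i, w in enumerate(words)}

def bigram_pairs(text, vocab):
    ids = [vocab[w] for w in text.split()]
    return np.array(list(zip(ids, ids[1:])))     # (num_pairs, 2): previous word, next word

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def train(W, pairs, lr, steps):
    """Gradient descent on the mean next-word cross-entropy of a bigram softmax model."""
    W = W.copy()                                 # never modify the caller's weights in place
    prev, nxt = pairs[:, 0], pairs[:, 1]
    for _ in range(steps):
        probs = softmax(W[prev])                 # predicted next-word distributions
        probs[np.arange(len(nxt)), nxt] -= 1.0   # gradient of cross-entropy w.r.t. the logits
        grad = np.zeros_like(W)
        np.add.at(grad, prev, probs)             # accumulate gradients row by row
        W -= lr * grad / len(pairs)
    return W

general = "the cat sat on the mat . the dog sat on the rug ."
domain = "the patient sat on the bed . the nurse sat on the chair ."
vocab = build_vocab(general, domain)
W0 = np.zeros((len(vocab), len(vocab)))

# Pre-training: learn general patterns from the broad corpus.
W_pre = train(W0, bigram_pairs(general, vocab), lr=1.0, steps=200)
# Fine-tuning: start from the pre-trained weights and continue on domain data.
W_ft = train(W_pre, bigram_pairs(domain, vocab), lr=0.5, steps=50)

def p(W, prev, nxt):
    return softmax(W[vocab[prev]])[vocab[nxt]]

print(f"P(patient | the): {p(W_pre, 'the', 'patient'):.3f} -> {p(W_ft, 'the', 'patient'):.3f}")
print(f"P(sat | cat):     {p(W_pre, 'cat', 'sat'):.3f} -> {p(W_ft, 'cat', 'sat'):.3f}")
```

Fine-tuning raises the probability of domain vocabulary (such as "patient" after "the"), while the row for "cat", which never appears in the domain data, is left exactly as pre-training set it. In a real transformer all parameters are shared across contexts, so fine-tuning can also erode general abilities, a problem known as catastrophic forgetting.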

By combining pre-training on large-scale datasets with fine-tuning on task-specific data, LLMs exhibit remarkable capabilities in generating coherent and contextually relevant text. The availability of extensive text datasets, coupled with unsupervised learning and fine-tuning, empowers LLMs to understand and generate human-like text.

Understanding the training process of LLMs is essential for grasping their capabilities and potential. By training on large datasets, utilising unsupervised learning techniques, and fine-tuning on task-specific data, LLMs can generate high-quality text that aligns with human language patterns. This training process forms the foundation for LLMs to excel in various applications, such as natural language processing, chatbots, language translation, and content generation. As the field of LLMs continues to evolve, advancements in training methodologies will further enhance their language understanding and generation abilities.

Adversarial Generative Networks (AGNs)

In recent years, the field of artificial intelligence has witnessed significant advancements in the development of Adversarial Generative Networks (AGNs). AGNs, more commonly known as Generative Adversarial Networks (GANs), have revolutionized the generation of realistic, high-quality synthetic data, particularly images, and have also been applied to text. This section will explore the concept of AGNs, their application in language generation, and their role in the evolution of language models.

Introducing Adversarial Generative Networks (AGNs)

AGNs are a class of neural network architectures that consist of two main components: a generator and a discriminator. The generator aims to generate synthetic data, while the discriminator's role is to distinguish between real and synthetic data. AGNs operate in a competitive manner, where the generator continually improves its ability to generate realistic data by fooling the discriminator, while the discriminator strives to become more proficient in distinguishing between real and synthetic data.

The adversarial training process of AGNs involves iteratively updating the generator and discriminator networks. This training process enables AGNs to learn the underlying data distribution and generate synthetic samples that closely resemble the real data. AGNs have shown remarkable success in computer vision, especially image synthesis, and have also been explored for natural language processing and text generation.
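The adversarial loop can be sketched with the smallest GAN imaginable, written in NumPy with hand-derived gradients. Everything here is an illustrative assumption: one-dimensional "real data" drawn from a normal distribution with mean 4, a generator that can only learn a shift `b` of its input noise, and a discriminator that is plain logistic regression. Each iteration takes one discriminator step, then one generator step using the common non-saturating generator loss.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(x):
    # tanh form of the logistic function avoids overflow for large |x|
    return 0.5 * (1.0 + np.tanh(0.5 * x))

def train_gan(steps, lr=0.05, batch=64, real_mean=4.0):
    """1-D GAN: generator G(z) = z + b, discriminator D(x) = sigmoid(w * x + c)."""
    b = 0.0          # generator parameter: learned shift of the noise
    w, c = 0.0, 0.0  # discriminator parameters (logistic regression)
    for _ in range(steps):
        real = rng.normal(real_mean, 1.0, batch)
        fake = rng.normal(0.0, 1.0, batch) + b

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = np.mean(-(1.0 - d_real) * real + d_fake * fake)
        grad_c = np.mean(-(1.0 - d_real) + d_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step (non-saturating loss): minimise -log D(G(z)), i.e. try to fool D.
        fake = rng.normal(0.0, 1.0, batch) + b
        d_fake = sigmoid(w * fake + c)
        grad_b = np.mean(-(1.0 - d_fake) * w)
        b -= lr * grad_b
    return b, w, c

b, w, c = train_gan(steps=3000)
print(f"learned generator shift b = {b:.2f} (real data mean = 4.0)")
```

With a linear discriminator, the generator can only learn to match the mean of the real data, and even this toy tends to overshoot before settling. Practical GANs use deep networks for both players, and their training is notoriously prone to oscillation and instability.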

AGNs in Language Generation

AGNs have made significant contributions to the field of language generation. They have been applied to tasks such as text synthesis, text completion, and text style transfer. By training on large-scale text datasets, AGNs can capture the statistical properties and patterns of natural language and generate coherent and contextually relevant text.

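In language generation tasks, the generator component of AGNs learns to produce realistic and coherent text samples, while the discriminator component evaluates the quality and authenticity of the generated text. Text poses a particular difficulty, however: it is made of discrete tokens, and because choosing a token is not a differentiable operation, the discriminator's feedback cannot be back-propagated directly into the generator. Text GANs therefore rely on workarounds such as reinforcement-learning-style policy gradients (as in SeqGAN) or continuous relaxations like the Gumbel-softmax. Through the adversarial training process, AGNs can refine their language generation capabilities, leading to more convincing and human-like text output.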

The Evolution of Language Models with AGNs

Adversarial ideas have also been explored as a complement to conventional language-model training. It is worth being precise, though: the LLMs behind today's chatbots, such as the GPT family, are trained with the autoregressive next-word objective described earlier, followed by fine-tuning, rather than with adversarial training. Their rapid progress owes far more to transformer architectures, larger models and larger datasets than to AGNs.

Research on adversarial text generation pursues a related goal: text that is not only coherent but also hard to distinguish from real-world writing. If such techniques mature, they could contribute to more natural responses in applications such as chatbots, content generation, and dialogue systems.

Generative models of this kind could also produce highly realistic and diverse text samples, simulating different writing styles and genres or even emulating specific authors. This opens up exciting possibilities for creative writing, content generation, and personalized user experiences.

As the next section explores the technical workings of LLMs in more detail, we will gain further insight into how they process prompts and generate text. Understanding these mechanisms is crucial as we examine the potential benefits and risks associated with the evolving landscape of LLMs in the following sections.

Generating Responses with Language Models

Language Models have evolved to become powerful tools for generating responses in natural language. With advancements in training techniques and the use of large datasets, LLMs can generate coherent and contextually relevant text based on given prompts. In this section, we will explore the technical workings of LLMs, how they process prompts, and the role of attention mechanisms.

When interacting with an LLM, the process begins by providing a prompt, which serves as the input to the model and initiates the response generation process.

LLMs utilize their internal architecture, often based on transformers, to process the input sequence. Transformers excel at capturing long-range dependencies and contextual relationships within the text. One key component of transformers is the attention mechanism, which allows the model to focus on relevant parts of the input sequence while generating the response.

Attention mechanisms in LLMs enable the model to assign different weights to each token in the input sequence based on their importance and relevance. This attention weight distribution helps the model capture contextual information and generate more accurate and coherent responses.

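To understand attention mechanisms in LLMs, let's consider an example. Suppose we have the prompt "Once upon a time" as our input. The tokenizer converts this prompt into a sequence of tokens, roughly ["Once", "upon", "a", "time"] (real tokenizers such as GPT-2's work with sub-word units, so longer or rarer words may be split into several pieces), and maps each token to an integer ID. Each ID is then looked up in the embedding layer to obtain a vector, and the transformer layers turn these vectors into representations that reflect the surrounding context.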

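As the input sequence propagates through the transformer layers, the attention mechanism calculates a score for every pair of tokens (in GPT-style models, each token can only attend to itself and the tokens before it). These scores, normalised into weights with a softmax, determine how much importance the model assigns to each token when building its representation of the context and predicting the next token.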

For instance, when generating the next word in the response, the attention mechanism might assign higher weights to tokens like "upon" and "time" if they are more relevant to the context. By focusing on these tokens, the LLM can generate more contextually appropriate and coherent responses.
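The scoring and weighting described above is scaled dot-product attention. Below is a single-head NumPy sketch with a causal mask, as used in GPT-style models. The embeddings and projection matrices are random placeholders (assumptions), so the printed weights illustrate the mechanics rather than anything a trained model would learn about "Once upon a time".

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def causal_self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention with a causal mask.

    X: (seq_len, d_model) token embeddings; Wq, Wk, Wv: (d_model, d_head) projections.
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])               # (seq_len, seq_len) attention scores
    seq_len = X.shape[0]
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)             # a token may not look at later tokens
    weights = softmax(scores)                              # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
tokens = ["Once", "upon", "a", "time"]
d_model, d_head = 8, 4
X = rng.normal(size=(len(tokens), d_model))               # stand-in for learned embeddings
Wq, Wk, Wv = (rng.normal(size=(d_model, d_head)) for _ in range(3))

output, weights = causal_self_attention(X, Wq, Wk, Wv)
print(np.round(weights, 2))   # row i: how much token i attends to tokens 0..i
```

Each row of `weights` sums to 1 and is zero above the diagonal, so the first token can attend only to itself. Real transformers run many such heads in parallel in every layer, with learned projection matrices.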

The attention mechanism enables the LLM to capture dependencies between tokens that are separated by long distances in the input sequence. This long-range dependency modelling helps the model understand complex sentence structures and generate responses that maintain coherence.

To illustrate the prompt-based response generation process using attention-based LLMs, let's consider a code example using the Hugging Face Transformers library in Python:

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the pre-trained GPT-2 model and its matching tokenizer
model_name = "gpt2"
model = GPT2LMHeadModel.from_pretrained(model_name)
tokenizer = GPT2Tokenizer.from_pretrained(model_name)

# Switch to evaluation mode (disables dropout)
model.eval()

# Define a prompt
prompt = "Once upon a time"

# Tokenize the prompt into input IDs (plus an attention mask) as PyTorch tensors
inputs = tokenizer(prompt, return_tensors="pt")

# Generate a response. GPT-2 has no padding token, so the end-of-sequence
# token is reused as pad_token_id to avoid a warning from generate().
output = model.generate(
    inputs["input_ids"],
    attention_mask=inputs["attention_mask"],
    max_length=100,
    num_return_sequences=1,
    pad_token_id=tokenizer.eos_token_id,
)

# Decode the token IDs back into text and print the response
response = tokenizer.decode(output[0], skip_special_tokens=True)
print("Generated Response:", response)
```

In this code example, we load a pre-trained language model (in this case, GPT-2) and its corresponding tokenizer using the Transformers library. The model is set to evaluation mode, which disables training-only behaviour such as dropout.

We define a prompt, which serves as the initial text to generate a response. The prompt is tokenized using the tokenizer, and the numerical representations (input IDs) are obtained.

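Next, we use the generate method of the model to generate a response based on the given prompt. The max_length parameter caps the total length of the output, prompt included, at 100 tokens, and the num_return_sequences parameter controls how many response sequences are returned. Because no sampling options are set, generate uses greedy decoding, always picking the most likely next token, which tends to produce repetitive text; passing do_sample=True (optionally with top_k or top_p) gives more varied output.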

The generated output is in the form of token IDs. We use the tokenizer to decode the output and convert it back into human-readable text. Finally, we print the generated response.
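Under the hood, `generate` runs an autoregressive loop: score every possible next token, choose one, append it, and repeat until an end-of-sequence token or the length limit is reached. The sketch below reproduces that loop in plain Python with a hypothetical hand-written probability table standing in for the model, so that greedy decoding (what `generate` does unless sampling is enabled) can be compared with temperature sampling.

```python
import math
import random

# Hypothetical next-token table standing in for a trained model's predictions.
NEXT = {
    "<start>": {"once": 0.9, "the": 0.1},
    "once": {"upon": 1.0},
    "upon": {"a": 1.0},
    "a": {"time": 0.6, "hill": 0.3, "dragon": 0.1},
    "time": {"<eos>": 1.0},
    "hill": {"<eos>": 1.0},
    "dragon": {"<eos>": 1.0},
    "the": {"end": 1.0},
    "end": {"<eos>": 1.0},
}

def decode(temperature=0.0, max_tokens=10, seed=None):
    """Autoregressive decoding: repeatedly choose a next token and append it."""
    rng = random.Random(seed)
    token, output = "<start>", []
    for _ in range(max_tokens):
        probs = NEXT[token]
        if temperature == 0.0:
            token = max(probs, key=probs.get)      # greedy: always the most likely token
        else:
            # Temperature rescales log-probabilities: <1 sharpens, >1 flattens the distribution.
            weights = [math.exp(math.log(p) / temperature) for p in probs.values()]
            token = rng.choices(list(probs), weights=weights)[0]
        if token == "<eos>":
            break
        output.append(token)
    return " ".join(output)

print(decode())                                            # once upon a time
print([decode(temperature=1.5, seed=s) for s in range(3)])
```

Greedy decoding always returns the same text; sampling trades that determinism for variety, and the temperature controls how far it strays from the most likely tokens.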

This code example showcases the basic process of prompt-based response generation using the Transformers library. By modifying the prompt and adjusting the parameters, you can experiment with generating diverse and contextually appropriate responses.

By leveraging attention mechanisms, LLMs can assign varying degrees of importance to different parts of the input sequence, allowing for more accurate and contextually relevant responses. Attention mechanisms enable the model to capture long-range dependencies and generate coherent output.

In the next section, we will look at how LLMs use the conversation history to keep their responses coherent across multiple turns of a dialogue.

Incorporating Conversational Context

Language Models have advanced beyond standalone prompt-based response generation. With the ability to leverage conversation history, LLMs can generate responses that are more contextually aware and maintain coherence within ongoing conversations. In this section, we will explore how LLMs incorporate conversational context, the techniques used to maintain context and coherence, and the improvements achieved in response generation through the use of conversational context.

One of the key challenges in generating responses within a conversational context is the need to consider the entire conversation history, including previous messages, when generating a response. Transformer-based LLMs do not keep a persistent memory between requests: the model itself is stateless, and its only "memory" of earlier turns is whatever part of the conversation is included in its input, up to the limit of its context window.

To maintain context and coherence, applications built on LLMs typically include the conversation history in the input, placing the previous messages before the new prompt as additional tokens. By incorporating this context into the response generation process, LLMs can generate more contextually appropriate and coherent responses.
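Including the history can be sketched as a small prompt-assembly function. The "User:"/"Assistant:" layout and the word budget (a rough stand-in for the model's token limit) are assumptions for illustration; the key idea is that when the history grows too long, the oldest turns are dropped first so the newest message always fits.

```python
def build_prompt(history, user_message, max_words=50):
    """Assemble a prompt from past turns plus the new message.

    history: list of (speaker, text) tuples, oldest first. Older turns are
    dropped first when the word budget would be exceeded.
    """
    turns = history + [("User", user_message)]
    kept, used = [], 0
    for speaker, text in reversed(turns):          # walk from newest to oldest
        cost = len(text.split())
        if kept and used + cost > max_words:       # always keep at least the newest turn
            break
        kept.append(f"{speaker}: {text}")
        used += cost
    kept.reverse()                                 # restore chronological order
    return "\n".join(kept + ["Assistant:"])        # leave a slot for the model's reply

history = [
    ("User", "Tell me a fact about France."),
    ("Assistant", "Paris is the capital of France."),
]
print(build_prompt(history, "What is the capital of France?"))
# User: Tell me a fact about France.
# Assistant: Paris is the capital of France.
# User: What is the capital of France?
# Assistant:
```

With `max_words=12`, only the last two turns fit, so the opening request is silently forgotten, which is the same thing that happens when a long chat outgrows a model's context window.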

The conversation history serves as a valuable source of information for LLMs. It helps the model understand the context of the current prompt and generate responses that are tailored to the ongoing conversation. By considering the previous messages, LLMs can generate responses that address specific questions, refer to previous topics, or exhibit a consistent conversational style.

To help the model tell the parts of a conversation apart, chat-tuned LLMs are trained with special tokens or role markers that delimit each turn and identify the speaker (user or assistant). Attention masks play a supporting role: they tell the model which positions hold real tokens rather than padding and, in GPT-style models, stop each token from attending to later ones. Together, these techniques help the model attend to the relevant parts of the input and appropriately balance the influence of the conversation history and the current prompt in generating the response.
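Here is a minimal sketch of turn formatting with role markers. The marker strings are hypothetical: every chat model defines its own special tokens and template, and Hugging Face tokenizers expose a model's template through `apply_chat_template`. The messages reuse the France example discussed in this section.

```python
# Hypothetical role markers; each real chat model defines its own special tokens.
ROLE_TOKENS = {"user": "<|user|>", "assistant": "<|assistant|>"}
END_TOKEN = "<|end|>"

def format_chat(messages):
    """Wrap each turn in role markers so the model can tell speakers and turn boundaries apart."""
    parts = [f"{ROLE_TOKENS[m['role']]}{m['content']}{END_TOKEN}" for m in messages]
    parts.append(ROLE_TOKENS["assistant"])   # open an assistant turn for the model to complete
    return "".join(parts)

messages = [
    {"role": "user", "content": "Paris is the capital of France."},
    {"role": "assistant", "content": "That's right."},
    {"role": "user", "content": "What is the capital of France?"},
]
print(format_chat(messages))
```

Because the model saw the same markers during fine-tuning, it learns that text after the final assistant marker is its own reply, and that earlier user turns are context rather than instructions to repeat.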

By incorporating conversational context, LLMs can generate more meaningful and engaging responses. The model can refer back to earlier messages to provide informative and relevant replies, maintain a coherent dialogue flow, and adapt its responses based on the evolving conversation dynamics.

For example, imagine a conversation where the prompt is "What is the capital of France?" and the previous messages in the conversation history include "Paris is the capital of France." By incorporating the conversation history, the LLM can generate a response like "Yes, Paris is indeed the capital of France."

Incorporating conversational context not only enhances the quality of responses but also enables LLMs to participate in more interactive and dynamic conversations. These models can simulate human-like interactions by understanding the ongoing dialogue and generating responses that align with the context and intent of the conversation.

In the next section, we will explore the potential applications and the societal impact of LLMs, including both the benefits and challenges associated with their widespread use.

Real-time Processing and Ethical Implications

Language Models are evolving to be capable of processing real-time information, opening up new possibilities and raising ethical concerns. In this section, we will explore the potential of LLMs in real-time processing, the applications and benefits it offers, and the ethical implications and risks associated with this advancement.

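LLMs traditionally operate on static prompts and generate responses based only on the given input. A model's weights are fixed once training ends, so its built-in knowledge stops at its training cut-off. "Real-time" LLM systems work around this by fetching up-to-date data at request time (from search engines, news feeds, APIs, or databases) and including it in the prompt, a technique known as retrieval-augmented generation. This lets them respond to recent changes in the world without retraining the model.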
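A common way to give an LLM current information, known as retrieval-augmented generation, can be sketched in a few lines: pick the stored documents most relevant to the question and place them, with their dates, in the prompt. The documents, dates and word-overlap scoring below are hypothetical placeholders; a production system would query a search engine or vector database, but the prompt-building step looks much the same.

```python
import re
from datetime import date

# Hypothetical "live" documents; a real system would fetch these from a search API or database.
DOCUMENTS = [
    {"date": date(2024, 5, 1), "text": "Central bank holds interest rates at 5 percent."},
    {"date": date(2024, 5, 2), "text": "Storm warning issued for the northern coast tonight."},
    {"date": date(2024, 5, 2), "text": "Local team wins the regional football final."},
]

def words(text):
    """Lower-case word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=1):
    """Return the k documents sharing the most words with the query (a crude stand-in for search)."""
    q = words(query)
    return sorted(documents, key=lambda d: len(q & words(d["text"])), reverse=True)[:k]

def build_grounded_prompt(query, documents, today, k=1):
    context = "\n".join(f"[{d['date'].isoformat()}] {d['text']}" for d in retrieve(query, documents, k))
    return (
        f"Today is {today.isoformat()}. Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )

print(build_grounded_prompt("Is there a storm warning tonight?", DOCUMENTS, date(2024, 5, 2)))
```

The model never learns anything new here: the fresh facts live only in the prompt, which is why such systems are only as reliable and current as the sources they retrieve from.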

The potential applications of real-time LLMs are vast and impactful. They can be leveraged in various domains, such as news and social media analysis, real-time customer support, and instant information retrieval. Real-time LLMs can analyze the latest news articles, social media posts, or customer inquiries, and generate timely and relevant responses, enabling instant information access and personalized interactions.

One of the major benefits of real-time LLMs is their ability to provide up-to-date information and insights. They can process and interpret the latest data, allowing users to make informed decisions based on the most recent information available. For instance, real-time LLMs can assist in monitoring stock market trends, analyzing real-time data feeds, or tracking emerging topics and events.

Real-time LLMs can also have positive societal impacts. They can facilitate quick and accurate dissemination of critical information during emergencies or crisis situations. By analyzing and summarizing real-time data from multiple sources, these models can help emergency responders, journalists, and the general public stay informed and respond effectively to rapidly evolving situations.

However, the advancement of real-time LLMs also raises ethical concerns and risks. One major concern is the potential misuse of these models for malicious intent. For example, real-time LLMs could be exploited for spreading misinformation or propaganda in an automated and targeted manner. This poses risks to public trust, democratic processes, and social stability.

Another ethical concern is privacy and data security. Real-time LLMs rely on access to large amounts of data, including personal information. Safeguarding the privacy of individuals and ensuring responsible data handling practices become crucial to prevent unauthorized use or abuse of sensitive information.

Moreover, the real-time nature of LLMs raises questions about accountability and transparency. Because these models produce responses instantly and at scale, there is little opportunity for human review, and the process behind any given output is hard to interpret. This lack of transparency makes biases, unfair practices, or unintended consequences more likely to go unnoticed or unchallenged.

Addressing these ethical concerns and risks requires a multifaceted approach. It involves developing robust mechanisms for detecting and mitigating malicious use, ensuring privacy protection and data governance, promoting transparency and explainability in model behaviour, and fostering responsible use and deployment of real-time LLMs.

As the capabilities of LLMs continue to advance, it is essential to strike a balance between harnessing the benefits of real-time processing and addressing the associated ethical challenges. This necessitates ongoing research, collaboration between stakeholders, and the establishment of ethical frameworks and guidelines for the development, deployment, and regulation of real-time LLMs.

In the next section, we will look at how the AI industry, business leaders, and wider society view these developments, and the concerns they raise.

Perspectives and Concerns

The rapid evolution of LLMs has garnered attention from various perspectives, including those within the AI industry, high-profile business people, and societal observers. While LLMs offer exciting possibilities, there are concerns and considerations that accompany their advancements. In this section, we will explore some of the perspectives and concerns surrounding LLMs and their implications.

From the AI industry's perspective, LLMs represent significant progress in natural language understanding and generation. Researchers and developers appreciate the advancements in training techniques, larger datasets, and the integration of attention mechanisms and transformers, which have propelled LLMs to new heights of performance. The ability to generate coherent and contextually relevant text has opened doors to a wide range of applications, including virtual assistants, chatbots, content generation, and more.

However, high-profile business people and industry leaders have expressed concerns about the potential misuse and unintended consequences of LLMs. Elon Musk, CEO of SpaceX and Tesla, has warned about the risks of artificial general intelligence and the need for responsible development. OpenAI, an organization at the forefront of LLM development, has also emphasized the importance of ethical considerations and responsible deployment to ensure LLMs serve the best interests of society.

One concern is the potential for LLMs to amplify biases present in the training data. LLMs learn from vast amounts of text data, which can inadvertently perpetuate societal biases, stereotypes, or discriminatory patterns. If not addressed, this can have negative impacts on various aspects of society, including fairness, diversity, and inclusivity.

Another concern is the ethical implications of generating highly realistic and deceptive text. LLMs can be used to create deepfake-like content or generate deceptive information that appears legitimate. This raises concerns about the spread of misinformation, propaganda, and the erosion of trust in online communication.

Furthermore, the increasing sophistication of LLMs raises questions about the future of human creativity and originality. As models become adept at generating text that resembles human writing, there are concerns that they might diminish the value of human-authored content or blur the boundaries between human and machine-generated works.

Societal observers express apprehensions about the potential societal impacts of widespread LLM use. The dissemination of AI-generated text can have significant implications for journalism, public discourse, and the democratization of information. The challenge lies in ensuring responsible deployment, transparency, and accountability to maintain the integrity of public conversations and avoid undue influence or manipulation.

Addressing these concerns requires a collaborative effort involving researchers, policymakers, industry stakeholders, and the wider public. Ethical guidelines, regulations, and responsible AI practices should be developed to ensure that LLMs are developed and deployed in a manner that aligns with human values, respects individual privacy and rights, and mitigates potential risks.

By considering the perspectives and concerns surrounding LLMs, we can foster a responsible and sustainable path forward. LLMs have the potential to revolutionize human-computer interactions and empower various domains, but their development and deployment must be accompanied by careful consideration of their implications to build a future where AI benefits society in a safe and equitable manner.

As we conclude, we will summarise how LLMs have evolved, the techniques behind them, and the concerns and challenges that accompany these powerful language generation models.

Conclusion

Language Models have witnessed exponential growth and advancement over the past few years, transforming the way we interact with artificial intelligence and widening its potential applications across many domains. From their humble beginnings to the current state-of-the-art models, LLMs have come a long way in generating coherent and contextually relevant responses.

The concept of AI models aiding us was once just a dream, but recent advancements have turned that dream into reality. Through the training process on large datasets and the utilization of sophisticated techniques such as attention mechanisms and transformers, LLMs have become powerful tools for natural language understanding and generation.

Adversarial Generative Networks (AGNs), better known as GANs, represent a complementary line of generative research. Their adversarial training has produced strikingly realistic synthetic data, especially images, and has been explored for text generation, although today's mainstream LLMs are trained autoregressively rather than adversarially.

The process of generating responses with LLMs involves several steps, including tokenization, encoding, and decoding. The model analyses the input sequence, applies attention mechanisms, and produces output one token at a time. With popular libraries like Hugging Face Transformers in Python, developers can generate text from a pre-trained model in a few lines of code and build on that to create simple interactive tools such as command-line chatbots.

Incorporating conversational context into LLMs allows them to generate responses that maintain coherence within ongoing conversations. By leveraging the conversation history, LLMs can refer to previous messages, address specific questions, and exhibit a consistent conversational style. Techniques like attention masking and special tokens help in maintaining context and improving the quality of generated responses.

Real-time processing capabilities bring a new dimension to LLMs, enabling them to analyze up-to-date information and generate instant responses. This has implications in various domains, including news analysis, customer support, and emergency response. However, with these advancements come ethical concerns and risks, such as the potential misuse for malicious intent, privacy and data security, and the lack of transparency and accountability.

The perspectives from the AI industry, high-profile business people, and societal observers highlight both the excitement surrounding LLMs' possibilities and the concerns about their ethical implications. Addressing biases, promoting transparency, and ensuring responsible deployment are critical factors in harnessing the potential of LLMs while mitigating the associated risks.

In conclusion, LLMs have evolved exponentially, from simple prompt-based models to sophisticated systems that can process real-time information and incorporate conversational context. While they offer remarkable opportunities for innovation and advancement, it is essential to approach their development and deployment with caution and responsibility. By actively addressing ethical concerns, fostering transparency, and promoting responsible AI practices, we can shape the future of LLMs to benefit society while minimizing potential risks.